The $20 AI Subscription That Costs $500 to Deliver

The Hidden Economics Haunting AI Companies

A single Claude Code user consumed $150,000 worth of AI compute in one month. Their subscription fee? $200.

There is even a name for them: Inference Whales.

This isn't an outlier. This is how AI subscription businesses are breaking in real time.

Pursue the mission at all costs

The major frontier model companies are following identical playbooks. Price aggressively to capture users early. Bank on underlying infrastructure costs dropping rapidly.

When the mission is somewhat altruistic, it’s easier to justify the aggressive costs and means.

It also doesn’t help that Google’s stock rises when the company simply announces an increase in AI investment. Historically, public markets penalized that kind of spending increase.

Do Cheaper Old Models Matter?

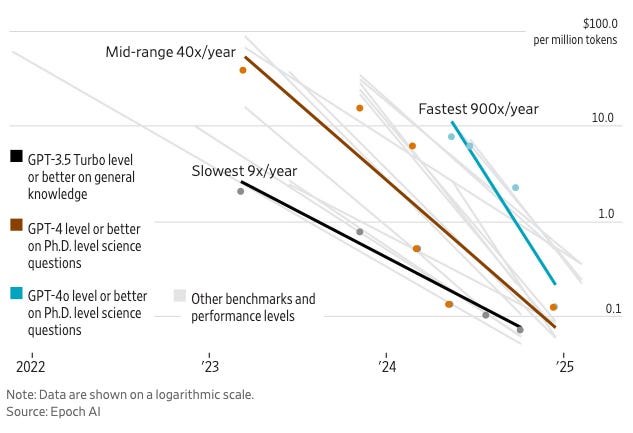

Research firms have hammered home that costs will decrease dramatically. That’s true, but it paints an incomplete picture.

(Source: WSJ, “Cutting-Edge AI Was Supposed to Get Cheaper. It’s More Expensive Than Ever.”)

Most users consistently migrate to the highest-performing model available. When GPT-4 launched at premium pricing, adoption was immediate despite cheaper alternatives existing. When Claude Opus was released, users switched again regardless of cost differences.

Now GPT-5 has been released at 10x cheaper than Opus with matched quality. There’s very little logic to the pricing. It’s clear OpenAI is making an aggressive move on Anthropic, and it is betting on GPT-5’s router capabilities to optimize token consumption, because the model is consuming tokens at rapidly growing volumes.

Users want the smartest AI available, not the cheapest AI from previous generations. The models are improving so much that it doesn’t make sense not to move.

Sure, a consumer doesn’t always need to switch, but an enterprise? It needs the advanced reasoning to make agentic workflows actually work.

Inference Consumption Has Exploded

The biggest multiplier is inference consumption.

Early AI applications returned brief, focused responses. Current systems perform multi-step reasoning, extensive research, iterative refinement, and complex analysis before delivering results.

Token usage by task complexity:

Basic chatbot Q&A: 50 to 500 tokens

Short document summary: 200 to 6,000 tokens 🤯

Basic code assistance: 500 to 2,000 tokens

Writing complex code: 20,000 to 100,000+ tokens

Legal document analysis: 75,000 to 250,000+ tokens

Multi-step agent workflow: 100,000 to 1,000,000+ tokens

A simple business task of summarizing a document has grown 6X in token consumption! I haven’t met a professional who doesn’t use AI for this use case.
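To make the token ranges above more concrete, here is a rough sketch that converts them into dollar cost per task. The token ranges come from the list above; the price per million tokens is a placeholder assumption, not a quoted rate from any provider, so swap in your own blended price.

```python
# Token ranges are taken from the list above (the "+" upper bounds are treated
# as plain upper bounds here). The price is a hypothetical placeholder.
TASK_TOKENS = {
    "Basic chatbot Q&A": (50, 500),
    "Short document summary": (200, 6_000),
    "Basic code assistance": (500, 2_000),
    "Writing complex code": (20_000, 100_000),
    "Legal document analysis": (75_000, 250_000),
    "Multi-step agent workflow": (100_000, 1_000_000),
}

# Assumed blended price in dollars per one million tokens (not from the article).
ASSUMED_PRICE_PER_MILLION = 10.0


def task_cost(tokens: int, price_per_million: float = ASSUMED_PRICE_PER_MILLION) -> float:
    """Dollar cost of a single task that consumes `tokens` tokens."""
    return tokens / 1_000_000 * price_per_million


def cost_table(price_per_million: float = ASSUMED_PRICE_PER_MILLION) -> dict[str, tuple[float, float]]:
    """Low and high dollar cost per task for every task type."""
    return {
        name: (task_cost(low, price_per_million), task_cost(high, price_per_million))
        for name, (low, high) in TASK_TOKENS.items()
    }


if __name__ == "__main__":
    for name, (low, high) in cost_table().items():
        print(f"{name:28s} ${low:.4f} to ${high:.4f}")
```

Whatever price you plug in, the spread between the first and last rows is the point: per-task cost scales directly with tokens consumed.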

Modern AI delivers superior results by performing significantly more computation per request. Each capability improvement increases the token cost per task. Inference is growing faster than imaginable.

Google said it processed 480 trillion monthly tokens in May 2025, and that figure more than doubled to 980 trillion monthly tokens by July. Source
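For a sense of what those two data points imply, here is a back-of-the-envelope calculation. It assumes growth compounded at a constant monthly rate between May and July, which is a simplification; two data points cannot confirm the shape of the curve.

```python
import math

# Figures quoted above, in trillions of tokens per month.
MAY_TOKENS = 480
JULY_TOKENS = 980
MONTHS_ELAPSED = 2  # May to July


def monthly_growth_rate(start: float, end: float, months: int) -> float:
    """Constant compounding monthly rate that takes `start` to `end`."""
    return (end / start) ** (1 / months) - 1


def doubling_time_months(rate: float) -> float:
    """Months needed to double at a constant monthly growth rate."""
    return math.log(2) / math.log(1 + rate)


if __name__ == "__main__":
    rate = monthly_growth_rate(MAY_TOKENS, JULY_TOKENS, MONTHS_ELAPSED)
    print(f"Implied monthly growth: {rate:.1%}")
    print(f"Implied doubling time: {doubling_time_months(rate):.2f} months")
```

Under that assumption, the implied growth works out to a bit over 40% per month.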

The Market's Impossible Dynamics

Companies understand the economic solution. Charge based on actual resource consumption. They also understand this approach eliminates competitive advantage.

While some companies implement usage-based pricing, venture-funded alternatives offer unlimited access at $20/month. Users consistently choose unlimited options.
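The tension between flat and usage-based pricing is easy to see with a small break-even sketch. The $20 subscription and the $150,000 versus $200 example come from this article; the cost per million tokens is a hypothetical number chosen only for illustration.

```python
def breakeven_tokens(subscription_price: float, cost_per_million: float) -> float:
    """Monthly tokens at which compute cost equals the flat subscription fee."""
    return subscription_price / cost_per_million * 1_000_000


def monthly_margin(subscription_price: float, tokens_used: int, cost_per_million: float) -> float:
    """Revenue minus compute cost for one subscriber in one month."""
    return subscription_price - tokens_used / 1_000_000 * cost_per_million


def cost_to_revenue_ratio(compute_cost: float, subscription_price: float) -> float:
    """How many dollars of compute are spent per dollar of subscription revenue."""
    return compute_cost / subscription_price


if __name__ == "__main__":
    ASSUMED_COST_PER_MILLION = 10.0  # hypothetical, not from the article

    # Tokens a $20/month subscriber can use before the plan loses money.
    print(breakeven_tokens(20.0, ASSUMED_COST_PER_MILLION))

    # Margin for a subscriber who uses 5 million tokens in a month.
    print(monthly_margin(20.0, 5_000_000, ASSUMED_COST_PER_MILLION))

    # The whale from the opening: $150,000 of compute on a $200 plan.
    print(cost_to_revenue_ratio(150_000, 200))
```

At the assumed price, a $20 plan breaks even at two million tokens, which is only a couple of runs at the top of the multi-step agent range listed earlier. The whale example needs no assumption: it is 750 dollars of compute per dollar of revenue.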

Market forces create a destructive cycle:

Honest pricing loses customers to subsidized competitors

Subsidized pricing eventually exhausts funding sources

Companies with the longest runway capture market share

Market leaders face identical underlying economics

The entire industry converges on the same unsustainable position. Burning capital to acquire users while assuming costs will improve.

The Industry Reckoning

Current AI subscription businesses serve their best customers at significant losses. Heavy usage drives the worst unit economics. Growth metrics obscure deteriorating financial fundamentals.

Companies can continue assuming that cheaper AI infrastructure will rescue unsustainable pricing models. Or they can acknowledge that consumption growth consistently outpaces cost reductions.
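The race between falling prices and rising consumption can be written as a one-line model: total spend scales by (1 - price decline) × (1 + usage growth) each period. The sketch below uses made-up rates purely to show the mechanics; none of the percentages are measurements.

```python
def spend_multiplier(price_decline: float, usage_growth: float, periods: int) -> float:
    """Factor by which total inference spend changes after `periods` periods.

    price_decline: fractional drop in price per token each period (0.5 = 50% cheaper).
    usage_growth: fractional rise in tokens consumed each period (1.0 = doubling).
    """
    per_period = (1.0 - price_decline) * (1.0 + usage_growth)
    return per_period ** periods


if __name__ == "__main__":
    # Hypothetical scenario A: prices halve while usage doubles, so spend is flat.
    print(spend_multiplier(price_decline=0.5, usage_growth=1.0, periods=3))

    # Hypothetical scenario B: prices halve while usage triples, so spend grows.
    print(spend_multiplier(price_decline=0.5, usage_growth=2.0, periods=2))
```

Spend only falls when the per-period product is below 1. Even a 50% price cut per period is cancelled out if usage doubles over the same period.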

Survivors will implement honest pricing from their earliest stages. The rest will provide expensive case studies for future entrepreneurs.

The subscription model crisis has begun. Market position matters less than unit economics.

What does this mean for AI Startups?

It means LLM optimization is mission-critical. It would be irresponsible not to optimize models for cost and performance, even if your current LLM costs are low.

It’s easy to point at the whales and say that’s not us.

But models are only improving, which means inference is increasing astronomically. Reasoning still has a long way to go, and continuous learning is only going to pour gasoline on the fire.

You don’t want to commit to sticking with older models, because what if the next one can transform your product?

Optimizing models based on the job to be done lets you use the best model for the hardest jobs, and gives you confidence that the easy jobs are downgraded to cheaper models when necessary.
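As a minimal sketch of what job-based routing could look like, the example below sends a fixed set of easy job types to a cheap tier and everything else to a frontier tier. The model names, prices, and job labels are all hypothetical placeholders; a real router would more likely classify requests dynamically and measure quality before downgrading.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str
    price_per_million: float  # dollars per one million tokens (assumed)


# Hypothetical tiers and prices, for illustration only.
CHEAP = ModelTier("cheap-model", 1.0)
FRONTIER = ModelTier("frontier-model", 15.0)

# Hypothetical job labels considered safe to downgrade.
EASY_JOBS = {"chat_qa", "short_summary", "basic_code_help"}


def route(job_type: str) -> ModelTier:
    """Send known-easy jobs to the cheap tier; default everything else to frontier."""
    return CHEAP if job_type in EASY_JOBS else FRONTIER


def blended_cost(jobs: list[tuple[str, int]]) -> float:
    """Total dollar cost for a list of (job_type, tokens) pairs after routing."""
    return sum(tokens / 1_000_000 * route(job).price_per_million for job, tokens in jobs)


if __name__ == "__main__":
    workload = [("chat_qa", 1_000_000), ("agent_workflow", 1_000_000)]
    print(route("chat_qa").name)
    print(route("agent_workflow").name)
    print(blended_cost(workload))
```

Defaulting unknown job types to the frontier tier is a deliberate choice in this sketch: it protects quality on anything unclassified and only saves money where you have explicitly decided a downgrade is acceptable.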

It’ll help you sleep better at night.