Newsletter: The Missing Link Between Agents & Business Results

Plus OpenAI DevDay and the State of AI

Brixo latest

We met with a company that built a customer-support agent.

They said engineering was thrilled about its performance: the agent handled 60% of tickets.

Then leadership asked them:

“Is it actually improving customer experience?”

“What’s it costing us per conversation?”

“Which flows are breaking or frustrating users?”

No answer.

All the answers exist somewhere in the messy telemetry data, but traditional AI observability tools don’t give engineering a view of them, so every question turns into a tedious, unscalable investigation.

To find an answer, someone has to ping engineering, who then has to query logs, export JSON, and try to translate it into business speak.

By the time insights reach leadership, they are outdated and incomplete.

This is the new gap forming:

Agent telemetry is technical. Business decisions are not.

Brixo bridges that gap.

We take the messy trace data that only engineers can read and turn it into clean, explainable business views:

Cost per run

ROI trends

Sentiment & customer impact

Breakdown of what’s actually working (and what’s not)
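To make "cost per run" concrete, here is a minimal sketch of the kind of roll-up involved, not a description of how Brixo does it. It assumes trace spans have already been flattened into records with a `run_id` and token counts; the field names, the sample spans, and the per-1K-token prices are all hypothetical.

```python
from collections import defaultdict

# Hypothetical per-1K-token prices in USD; real prices vary by model and provider.
PRICE_PER_1K = {"input": 0.0025, "output": 0.01}

# Hypothetical flattened trace spans; the field names are assumptions for illustration.
spans = [
    {"run_id": "run-1", "input_tokens": 1200, "output_tokens": 300},
    {"run_id": "run-1", "input_tokens": 800, "output_tokens": 200},
    {"run_id": "run-2", "input_tokens": 400, "output_tokens": 100},
]


def cost_per_run(spans, prices=PRICE_PER_1K):
    """Sum the token cost of every span that shares a run_id."""
    totals = defaultdict(float)
    for span in spans:
        cost = (
            span["input_tokens"] / 1000 * prices["input"]
            + span["output_tokens"] / 1000 * prices["output"]
        )
        totals[span["run_id"]] += cost
    return dict(totals)


costs = cost_per_run(spans)
for run_id, cost in sorted(costs.items()):
    print(f"{run_id}: ${cost:.4f}")
```

The arithmetic is the easy part. The hard part in practice is getting from raw traces to records this clean, and then tying each run back to a customer outcome.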

So when the next exec meeting comes up, the answer isn’t “let me check with engineering.”

It’s “here’s what our agents are doing, here’s the ROI, and here’s how to make them better.”

What stood out

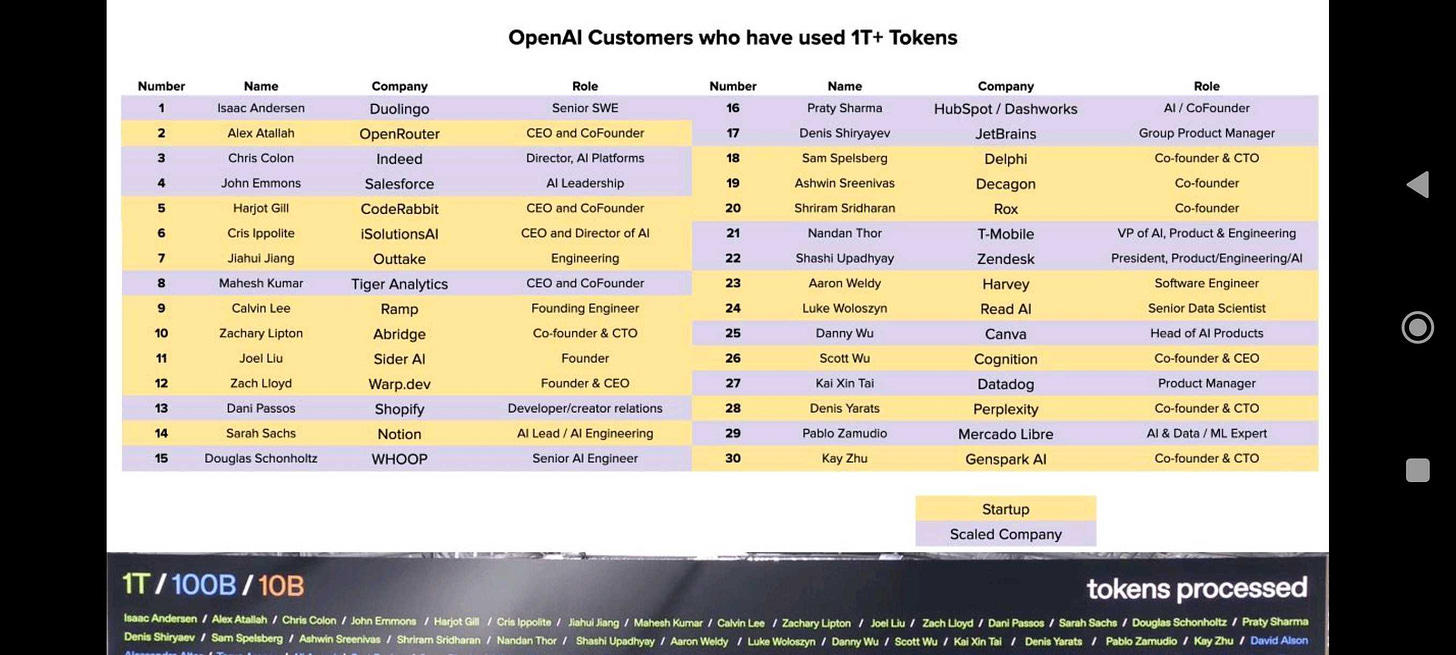

This week was OpenAI’s DevDay. They shared staggering statistics about the adoption of the OpenAI API.

6 billion tokens per minute are being processed (up from 300 million, a 20x increase).

Some developers in the room had processed over 1 trillion tokens on OpenAI’s platform.

Let that sink in.

What we read, listened to, and watched

The AI economy is entangled with itself

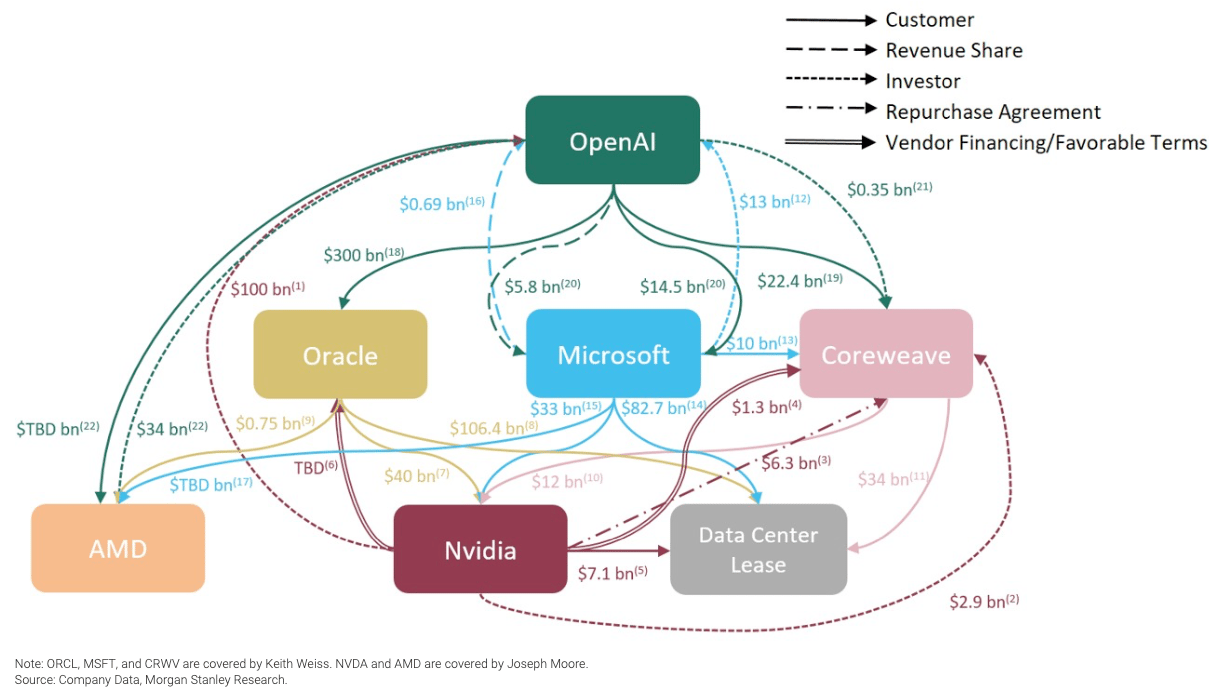

Phil Rosen provides a great breakdown of the latest OpenAI-AMD deal in the context of OpenAI’s other groundbreaking deals. (His content is worth a follow.)

Sam Altman on the a16z pod with Ben Horowitz

Nathan Benaich from Air Street Press provides a phenomenal 25-minute breakdown of the State of AI

Link to Video highlighted in screenshot below

Final Brick

Making sense of the OpenAI web

At first glance, the web of deals around OpenAI looks circular — money, chips, and contracts flowing in loops between the same few players. But it’s actually a layered supply chain, not a financial merry-go-round.

NVIDIA supplies the chips that power OpenAI’s models.

OpenAI, in turn, rents compute from Oracle and CoreWeave — who buy their GPUs from NVIDIA and finance those purchases through their own vendors.

Microsoft sits at the top as both OpenAI’s biggest shareholder and one of its largest customers, embedding the models across its products.

AMD’s recent $34 billion warrant deal adds another dimension — a bet that OpenAI will deploy 6 GW of its chips over time.

Each company is staking capital on a different layer of the same infrastructure: NVIDIA on hardware, Oracle on capacity, Microsoft on application scale, and AMD on entry.

It may look circular, but it’s vertical integration in real time, built around the single most valuable bottleneck in technology: compute.
