Newsletter: Is AI Funny?

From model routing to AI PMs—plus why humans and AI both fail spectacularly at getting the joke

Welcome to the latest Brixo Newsletter!

Brixo Latest

We’re continuing to work with new clients to provide a LLM assessment, where we evaluate the LLM production stack for improvements based on the 10 points outlined below:

Prompt efficiency

Long or redundant prompts can inflate costs and confuse the model. By tightening structure and wording, we reduce tokens while improving clarity, which lifts accuracy. This means lower spend and more consistent outputs without any model changes.

Token optimization

Many workloads pass unnecessary background text, system prompts, or long contexts. We analyze token distribution and identify trimming opportunities. Customers benefit from lower costs and faster response times, while preserving quality through smarter context management.

Caching

If the same or similar queries appear often, we enable both literal caching (exact match) and semantic caching (similar match using embeddings). This dramatically reduces repeated inference costs and improves latency, while still returning high-quality results.

Decoding tuning

LLM performance depends heavily on decoding parameters. For example, lowering temperature can reduce hallucinations, while tuning top-p/top-k controls creativity and determinism. By dialing in the right settings per workload, we deliver outputs that are both cheaper and more reliable.

Model parameterization

Not all tasks need a large, expensive model. Sometimes a smaller or instruction-tuned model performs just as well (or better) for certain workloads. We benchmark across variants to route work to the most efficient option, reducing cost while maintaining quality.

Context management

Many teams overload prompts with irrelevant or redundant context, which increases costs and risk of truncation. We analyze usage and recommend retrieval-augmented generation (RAG) or smarter truncation strategies. This yields leaner, more focused inputs and higher fidelity outputs.

Segmentation / Workload routing

Different task types behave differently. Summarization might work best on a fast, mid-sized model, while extraction benefits from high-accuracy ones. By segmenting your traffic, we route each task to the right model automatically, maximizing efficiency per workload.

Failure taxonomy

Errors aren’t random. Some may be hallucinations, others formatting issues, missing fields, or irrelevant answers. We classify and quantify these patterns, so we can directly address root causes with prompt changes, guardrails, or routing adjustments. This reduces business risk and error handling costs.

Guardrails

Beyond accuracy, customers need safe and policy-compliant outputs. We detect hallucination risk, toxicity, bias, and compliance violations. Then we layer in filters, schema enforcement, or fallback logic to keep outputs safe and aligned with business requirements.

Cost/latency breakdowns

We analyze where your spend and response delays are concentrated. This shows you exactly which workloads or patterns are driving cost and latency spikes. By focusing optimization on those hotspots, we deliver tangible and immediate ROI.

Next Steps:

Most AI teams don’t have the resources to consistently monitor their stack so we offer both an assessment and a product suite to ensure systems are operating at a high level and at the right investment. If this strikes your interest, let us know.

What stood out

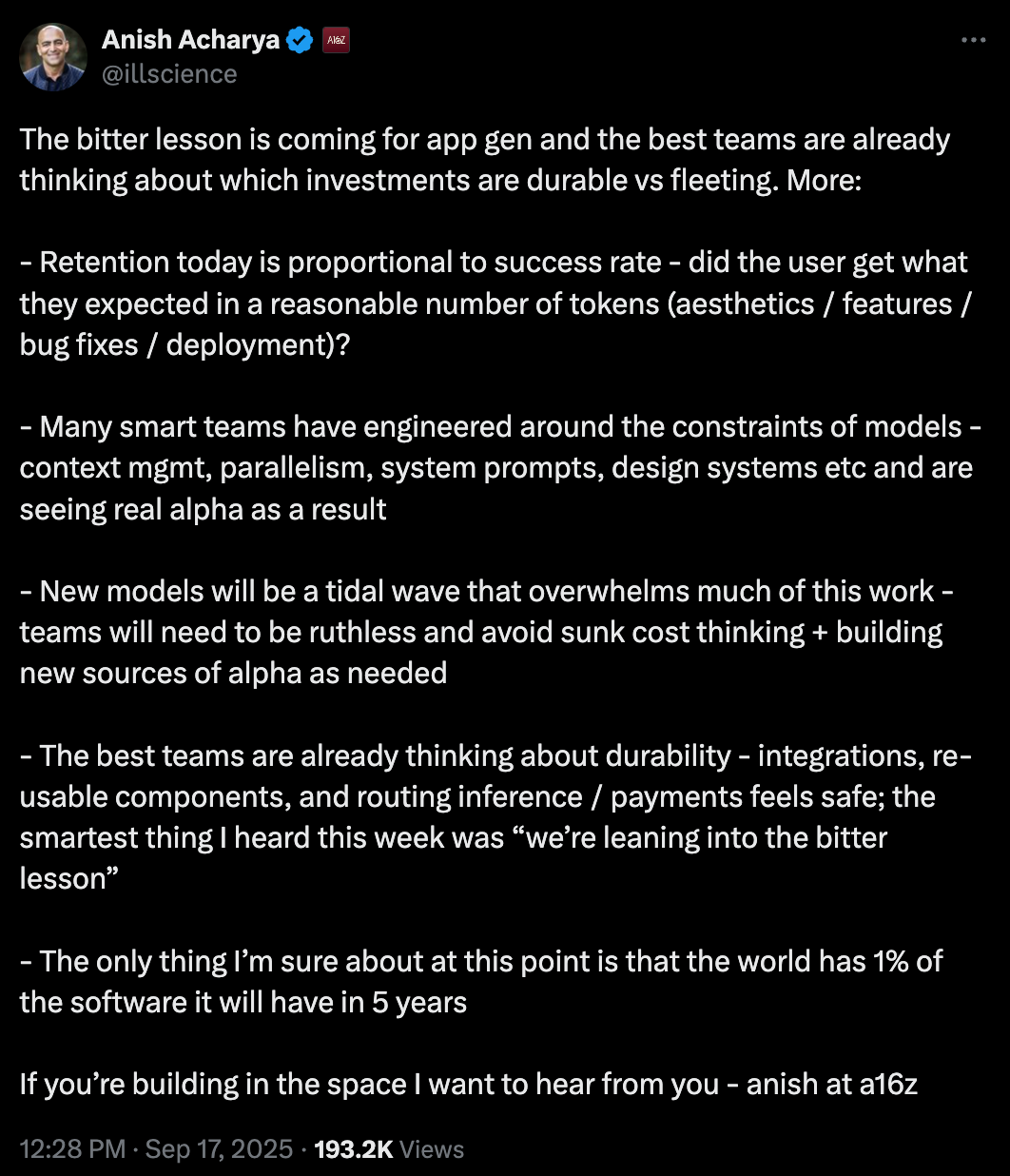

Anish Acharya from A16z had this to say about the challenges facing “AI App Gen” companies:

This is consistent with the theme over the latest few weeks about “AI App Gen” startups getting squeezed on pricing.

What we read/listened/watched

From the Brixo team:

OpenAI’s research on AI models deliberately lying is wild

The MEGA AI Handbook for AI Product Managers

This is comprehensive guide on “AI Eval FAQ” for AI PMs.

It’s symbolic of the current AI Stage. This is complicated new terminology for a job role that didn’t exist 2 years ago. People will need to learn new skills while AI matures to more user-friendly solution.

Final Brick

Did you know AI has a sense of humor?

In a recent study on humor detection—spotting punchlines in stand-up transcripts—top LLMs scored about 51% accuracy, barely above humans at 41%.

Both sides missed the mark often, since humor depends on timing, tone, and context that transcripts strip away.

The takeaway: when AI seems “funny,” it’s probably more luck than wit, and humans, well, “getting the joke” is still a challenge for most.