Brixo Newsletter: LLM Costs & "Inferentia"

Our weekly newsletter with updates, interesting content, and one final thought on the history of "inference"

Welcome to our first newsletter 🙂

Our goal is to share updates on Brixo and lessons from our journey building an AI-native company.

Brixo Update

While building our previous startup (pre-Brixo), we hit a familiar wall: LLM costs were spiraling while output quality declined. So we focused on solving that problem.

We built tooling that significantly cut our inference costs while improving output quality. It centers on real-time cost visibility, automated quality evaluation, and rapid iteration across prompts, models, and workloads.

Now it's packaged up for other teams facing similar challenges.

We’re looking to connect with teams spending on LLM inference who want visibility into their costs and a simpler stack, without sacrificing quality.

Whether we’re working with engineering leaders, ML/AI teams, or Ops/Finance folks managing inference budgets, we’ve consistently delivered 2-3x more value from existing LLM spend.

If you know someone with growing LLM costs, we’d love an intro.

(We're offering free assessments to show exactly where costs can be cut and quality improved.)

Also at Brixo:

We crossed a threshold this week by announcing Brixo to the digital world! The website is entirely “vibe-coded,” and next week we’ll share a breakdown of how we did it.

What stood out this week?

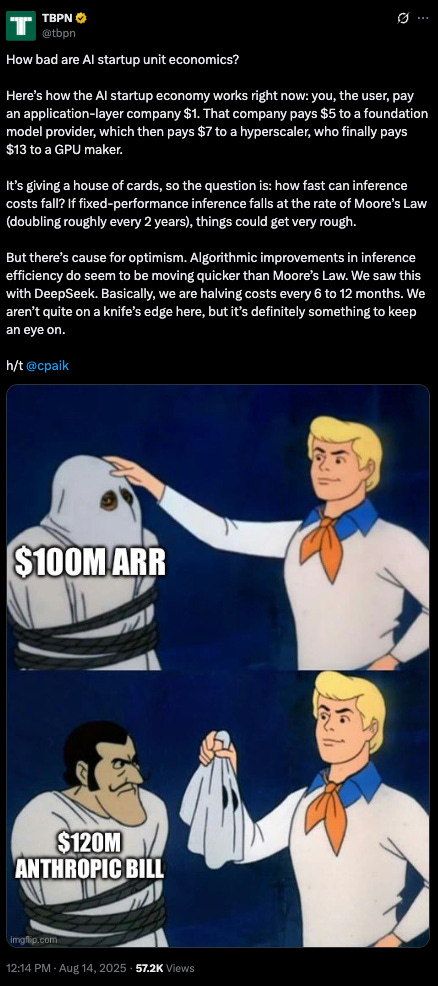

We’re betting on a world where businesses (not just engineers) will need visibility into their LLM/AI costs and performance, and will need to scale cost optimization techniques.

The hidden truth behind LLM costs

What we read, listened to, and watched:

Becoming an AI PM from Lenny’s Podcast.

This provided a few insights into the future of the Product Manager role. We believe the PM role is going to become mission-critical, because PMs are the gateway (or bottleneck) between engineering teams building LLM-powered features and the quality of the results customers receive.

Marketing in the Age of AI with Wiz’s CMO Raaz Herzberg

Some great insights into bringing AI into a bigger company.

If you prefer to read, here’s a great summary.

Bret Taylor of Sierra: How to sell to Enterprise Companies as an AI Startup

Incredible interview from someone worth following.

He was also recently on Lenny’s Podcast.

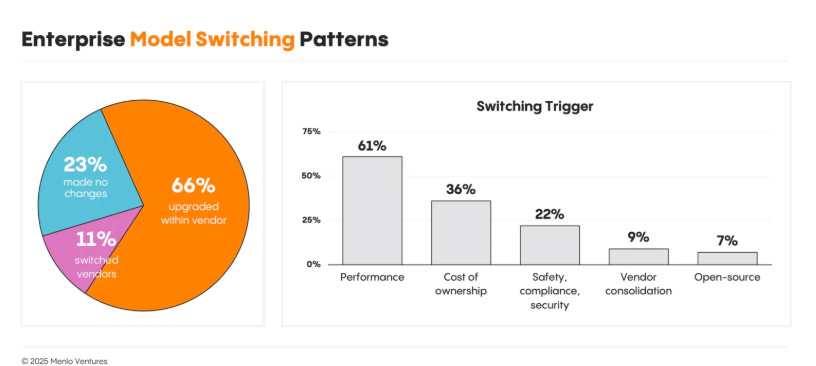

2025 Mid-Year LLM Market Update: Foundation Model Landscape + Economics

A great data-driven update on the LLM market. This chart is particularly interesting and supports what we’ve been learning: enterprises are continuing to upgrade to the newest models.

We all need a reminder to write like humans more often 😇

The Final Brick

We hear the term “inference” a lot when talking about LLMs.

But what does it actually mean and where does it come from?

The word dates back to the late 16th century, from medieval Latin inferentia, derived from inferent- (“bringing in”), from the verb inferre.

It entered the computing vernacular in the 1960s with early AI research.

Inference is the process of reaching a conclusion using evidence and reasoning rather than direct statements.

For example, if you see someone in a raincoat and rain boots, carrying an umbrella, you might infer it’s raining. But you don’t know that for certain, just as an LLM doesn’t know what color socks you’re wearing right now.

So the next time you get an answer from an LLM, remember: it’s making its best guess, not looking at your socks or reading your mind.